Stress can be overwhelming, and coping with it can be tougher than you think. While venting your emotions might do the trick, not everyone is readily available to lend you a listening ear. This is why people have been gradually turning to AI as a plausible outlet. As technology advances and AI becomes more prominent in daily life, more individuals are now using AI chatbots as crutches to overcome their mental health issues. From simple symptom-related queries to even seeking medication suggestions, the AI frenzy has expanded beyond just sharing troubled emotions to using chatbots as personal therapists. Although being ‘best friends’ with an AI chatbot may seem like a shortcut to quick relief, it can easily turn into a double-edged sword, causing more harm than good in the long run if not used carefully.

The Rising Dependence on AI Chatbots

While symptom-searching on Google was once considered a toxic trait of a hypochondriac, the introduction of advanced AI platforms like ChatGPT, Perplexity, and Gemini has taken this to a whole new level. People have begun using these chatbots to discuss their personal lives, health concerns, day-to-day shortcomings, and even relationship issues.

By nature, human beings long for a sense of presence, understanding, and connection. Having one of these apps installed on their phones provides individuals with easy, round-the-clock access to a readily available virtual companion, even while on the go. What is perceived as empathy is actually a simulation driven by data patterns rather than genuine emotional understanding. The positive, non-judgemental advice these chatbots offer blankets individuals with a false sense of the hope, safety, validation, and reassurance that they deeply crave. This is what drives them to continue relying on these tools as mental health support systems, despite being fully aware that they are non-sentient entities programmed to simulate empathy and understanding. When emotional needs are met symbolically, the brain treats it as real support. This tendency to attribute human qualities to a non-human entity is also called anthropomorphism.

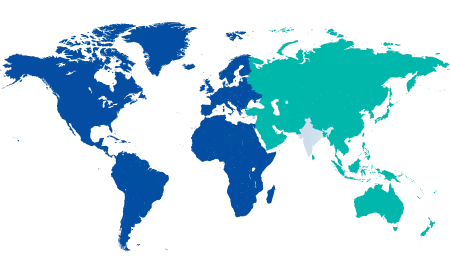

According to a 2024 study by NIH, about 28% of surveyed individuals reported using AI for ‘quick support and as a personal therapist.’ Meanwhile, a WHO Special Initiative for Mental Health study found that approximately 85% of individuals with mental disorders worldwide do not receive treatment. As the mental health crisis becomes a growing global challenge, this lack of access to timely treatment is encouraging more individuals to pursue AI chatbots as an alternative source of support.

Pros and Cons of This Trend

Depending on how extensively they are used, AI chatbots come with several advantages and limitations as a source of mental health support.

Some of the key advantages include:

Availability: Anxiety and depression can strike at any time of the day or night. Since AI chatbots are available round-the-clock, they are easily accessible to people who are experiencing sudden stress at work, while outdoors, or during a sleepless night at home. These chatbots also prove beneficial to those who are hesitant to attend in-person sessions due to location-based barriers or a busy schedule. That said, human support is also becoming more reachable than ever through pro bono therapists, government helplines, NGO-based services, and professionally designed venting platforms.

Affordability: Therapy and counselling sessions can be expensive and aren’t affordable for a major portion of the general population. By offering general advice and suggesting coping techniques, chatbots serve as a cost-effective alternative to people who are unable to afford traditional means of therapy.

De-stigmatization: Some mental health issues are heavily stigmatized, pushing patients away from seeking treatment. In such cases, chatbots offer a safe, private space to share their troubles without the fear of being judged.

Unbiased opinions: It is human nature to grow impatient with individuals who are sharing their problems, especially when they are dealing with issues such as loneliness, phobias, or addiction. People frequently find themselves feeling judged or misunderstood by those in their lives. In circumstances like this, chatbots present themselves as a helpful alternative, offering unbiased advice that remains neutral in both tone and emotion.

Effective integration: Numerous AI chatbots, particularly those that have been developed by hospitals or clinics as part of their mental health support system, effectively incorporate therapeutic techniques such as cognitive behavioral therapy (CBT) and dialectical behavior therapy (DBT) that prove useful in treating conditions such as anxiety and depression.

Some of the major concerns include:

Emotional disconnect: AI chatbots are programmed to replicate human emotions but cannot provide the same level of understanding, connection, and emotional depth that human therapists are capable of offering. This obvious lack of empathy makes it hard for chatbots to offer effective solutions for complex mental health issues.

Limited interventions for complex issues: Some mental health conditions, such as schizophrenia and severe depression, require the care and expertise of trained professionals. Chatbots can only provide general advice and coping strategies with little to no intervention, making them incapable of delivering comprehensive support.

Inaccurate diagnosis: Every individual experiences unique symptoms that cannot be accurately interpreted by AI chatbots. This is because they are programmed to provide preset responses to prompts, leading to potential inaccuracies in the diagnosis and advice they offer.

Privacy risks: Although most chatbots claim to be encrypted, privacy breaches remain a possibility. Sharing deeply personal information can therefore pose a threat to the user’s online safety.

Overdependence on technology: The convenience and ease of using AI chatbots can cause individuals to grow too comfortable, preventing them from seeking more detailed support through experts and qualified mental health professionals. Moreover, chatbots require access to a device as well as stable internet connectivity, something that non-tech-savvy individuals and those lacking reliable connectivity may find challenging.

Navigating Ethical Loopholes

Although AI chatbots recommend evidence-based psychotherapy techniques, they pose several ethical concerns:

- Absence of situational awareness: As chatbots rely on pre-programmed replies, they offer generic advice without considering an individual’s life experiences.

- Discrimination: Chatbots don’t embrace individuality and often provide biased responses concerning gender and cultural contexts.

- Lack of empathy: Chatbots are programmed to be polite, creating a false sense of connection between the bot and the user.

- Encouraging false beliefs: Chatbots are trained to maintain a supportive tone and validate users through algorithm-based responses, even if their beliefs are actually distorted or false. Unlike an actual therapist, chatbots cannot challenge users into thinking differently.

- Safety issues: Rather than denying support on sensitive topics, chatbots that aren’t carefully monitored may instead encourage vulnerable people into making harmful choices.

Towards a Healthier Use of AI in Mental Health Support

If used cautiously, AI chatbots can serve as valuable tools to individuals struggling with mental health conditions. Rather than completely replacing psychologists, counselors, and human therapists, chatbots should instead be integrated into the treatment plan. Several psychologists and therapists encourage users to use chatbots to track their mood and journal their thoughts between sessions. This practice plays an essential role in therapy, as it helps monitor patients’ progress over time.

Some chatbots developed by hospitals, clinics, and credible healthcare websites are specifically designed to aid people with mental health conditions and are generally considered safe to use. These systems are pre-programmed to watch for certain prompts and keywords associated with severe depression or suicidal ideation, which are carefully monitored and filtered to ensure user safety. Unlike generic chatbots, such platforms allow users to get in touch directly with a therapist when necessary, and redirect them to one if concerning language is detected.

Conclusion

While AI chatbots are a convenient, accessible, and inexpensive means of obtaining mental health support, they can do more harm than good if not used carefully and wisely. In-app tutorials, training programs, and informational articles from reputable online sources are available to spread awareness and educate users on the safe and healthy usage of AI chatbots. It is crucial for individuals to learn to identify genuine and reputable apps before utilising them for their personal benefit.

Ultimately, individuals should view chatbots as companions and stepping stones toward seeking care from mental health professionals, rather than substitutes.

FAQs

Mental health support is not widely accessible or affordable to a majority of people across India and the world, resulting in their increased reliance on AI chatbots for quick and easy relief. These chatbots are readily available and provide a 24/7 stigma-free space to share emotions, making them appealing to individuals who feel uncomfortable discussing personal issues with others.

Using AI chatbots as companions for advice on simple day-to-day issues can be healthy, but depending on them for medication advice or as a primary source of comfort over human connection can be destructive. Because they lack emotional intelligence and clinical judgment, chatbots can misinterpret sensitive situations and put individuals experiencing a mental health crisis at risk.

AI chatbots provide non-judgemental advice, which can be useful for managing day-to-day emotions. However, the accuracy of the information these platforms provide is always in question, making them an unreliable source when making important decisions. Excessive reliance on chatbots could also drive people away from real human contact, resulting in emotional isolation.

No, AI chatbots are just machines that have been programmed to simulate human emotion and empathy but can in no way replicate the human sensitivity, ethical understanding, and personalized insight that psychologists and human counsellors can provide.

How can AI chatbots be used responsibly to help a person in crisis during a mental health emergency?

Nowadays, several hospitals and medical platforms have created their own AI chatbots, specifically designed to help patients seek authentic medical advice and connect with psychologists for guidance. These can typically be safe to use. Yet, when in the midst of a serious mental health issue or an emergency, it is strongly recommended to consult a qualified psychologist or mental health professional instead of depending solely on an AI chatbot.
